Kalev Leetaru, University Fellow, University of Illinois Graduate School of Library and Information Science, gave the keynote presentation, “Pioneers in Mining Electronic News for Research,” at CRL's Global Resources Roundtable, “Beyond the Fold: Access to News in the Digital Era.” Leetaru pointed out that technological developments of the past twenty years have revolutionized the means of communication throughout the world (“Where there is power, there is Twitter”). The scale of media, especially social media, has grown to a nearly unfathomable extent. New tools and systems have emerged to mine, store, and make available vast quantities of data for study. And data-computing methods, once the exclusive domain of computer scientists, are now easily accessible to a wide range of researchers.

His major points:

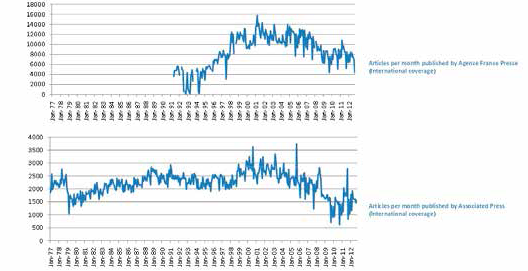

- The volume of data, measured in number of articles available, from mainstream media sources such as The New York Times or Agence France Presse appears to have been declining gradually but steadily since the early 2000s. At the same time, the explosion of web-based news presents researchers with immense opportunities as well as potential pitfalls, because they are now drawing conclusions from an exponentially growing base of data. For instance, while studies of violent demonstrations suggest there is more instability throughout the world now than in the mid-1990s, there may simply be more widespread coverage of events than in decades past. Understanding the data in context (for example, the number of articles covering a particular event relative to the total number of sources and articles available) therefore becomes important. Searching web-based content without a consistent, measurable baseline calls the resulting research into question.

- Traditional media offer a relatively long time horizon, especially compared with social media. Since the late 1800s, the press and communications have been a perennial focus of historical research. Since the 1960s, several new areas of academic research using news have come to the fore:

  - Communications research examines not only what is reported but also the context and medium of the reporting. Researchers in this area analyze the frequency of specific topics in reporting; reporting content, intensity, and valence; and the association of words and word patterns. They often perform advanced computational analysis.

  - Political communication is a specialized area of communications study, focusing specifically on biases, patterns, and trends in reporting on public figures, candidates, and political themes.

  - Political science and sociology research, which emerged strongly in the 1970s, uses coding schemes to categorize and assess the content of news coverage, creating data sets for ongoing research.

  - Linguistics research often enlists computer scientists to provide and process large, controlled text corpora, where consistency is necessary to enable replication of findings. Gigaword from the Linguistic Data Consortium, for example, provides DVDs of news corpora covering a set period of time for computational and linguistic analysis.

- Because research in the sciences, social sciences, and humanities has different needs, news must be preserved in a variety of formats: both as a “snapshot” of the original presentation and as text-only data. All users, however, need to understand the “completeness” of the archive, e.g., what percentage of the original articles from a given source are available. For web archives (born-digital news), we are still learning how researchers want to interact with archival content.
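Leetaru's point about measuring event coverage against a consistent baseline can be sketched in a few lines of Python. This is a minimal illustration, not a tool described in the talk: the function name `coverage_share` and its input format (dictionaries mapping a year to an article count drawn from one fixed set of sources) are hypothetical assumptions.

```python
from typing import Dict


def coverage_share(event_counts: Dict[int, int], total_counts: Dict[int, int]) -> Dict[int, float]:
    """Hypothetical helper: event articles as a fraction of all articles, per year.

    Both inputs are assumed to map a year to an article count taken from the
    same fixed set of sources, so the ratio controls for growth in overall volume.
    """
    shares: Dict[int, float] = {}
    for year, total in total_counts.items():
        if total <= 0:
            continue  # no baseline for this year; skip rather than divide by zero
        shares[year] = event_counts.get(year, 0) / total
    return shares


# Made-up counts for illustration only: raw event coverage quadruples,
# but so does the archive as a whole.
print(coverage_share({1995: 100, 2010: 400}, {1995: 10_000, 2010: 40_000}))
```

With these invented numbers, both years return a share of 0.01: the apparent fourfold rise in coverage reflects a larger archive, not a larger share of attention. Normalizing by the total rather than comparing raw counts is the design choice that makes the comparison meaningful.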

Debora Cheney, Larry and Ellen Foster Communications Librarian for Pennsylvania State University (PSU) Libraries, provided a focused analysis of actual use of news databases. Her presentation, “Use of Traditional News Databases at Penn State University: Trends and Implications,” was based on a study conducted at Penn State University Libraries of student and faculty use of large news databases. Although the findings are based on usage at PSU, they may point to broader trends in the use of news at other academic institutions.

Cheney observed that, with the ubiquity of news on the web and the easy availability of the large databases, students don’t necessarily associate libraries with news content anymore. She also noted that increasingly, researchers seek “news” from alternative sources: social media, blogs, and other content that the library does not supply.

Some key findings of the Penn State study:

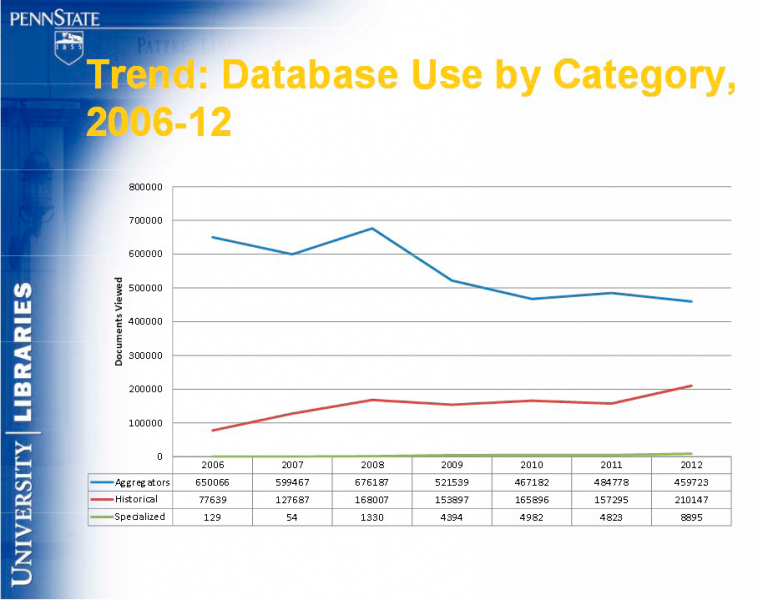

- Overall use of the text-only aggregator databases at Penn State, such as LexisNexis and Factiva, is declining. Also declining is the viewing of articles from major newspapers like The New York Times and The Washington Post within these products: the percentage of documents viewed from these key titles shows a steady decrease, from approximately 45% of all use in 2009 to just 13% in 2012.

- Despite the growing presence of international content in the aggregated databases, very few international sources are used to any great extent at Penn State. The exception is certain English-language resources, particularly financial publications, such as the Financial Times. Usage of wire service information (particularly business wires) and television news sources is increasing.

- Usage is generally much lower for historical news sources than for aggregated news, but use of historical sources may be driven in different ways. It is affected by such factors as the curriculum at Penn State and patterns in deep historical research. Contrary to the aggregator trends described above, however, use of the historical databases seems to be increasing over time.

- New web-based library discovery systems seem to be having an impact on news database use. Resources not linked to discovery systems such as ProQuest’s Summon, Ex Libris’s Primo, and EBSCO Discovery Service must rely on users' direct knowledge of them and on direct traffic to the product. After implementing Summon, Penn State noticed a major shift toward using The New York Times through ProQuest Historical Newspapers rather than through LexisNexis or Factiva. However, discovery systems bring added challenges for news research and researchers:

  - Including news content with non-news content in discovery systems produces a greater number of search results but a decline in the number of documents actually viewed.

  - Discovery systems do not distinguish “Page One” stories from other, less important content, nor do they necessarily weight results by the impact or the proximity of the source title. News is inherently a local phenomenon, but a system search may not distinguish between articles on the same subject from local and foreign sources.

  - Discovery systems also don’t de-duplicate stories that appear in multiple news sources, and they don’t search all resources in the same way. As a result, users may opt against using these tools for deep news research.

Cheney’s analysis suggests that librarians can play an important role in helping researchers navigate the complex landscape of access to electronic news databases more effectively. Cheney noted that faculty members strongly influence student opinion on what resources to use, and that they should be encouraged to direct students to the resources provided by their own institution’s libraries.